Introduction

A brain tumour is an abnormal mass of tissue inside the skull in which cells grow and multiply uncontrollably. Brain tumours are classified according to their speed of growth and the likelihood of recurrence after treatment, and they fall into 2 broad categories: benign and malignant. Benign tumours are not cancerous, grow slowly, and are less likely to return after treatment. Malignant tumours, on the other hand, consist of cancer cells that can invade surrounding tissue or spread to other parts of the body, a process called metastasis [1]. Most patients who develop brain metastases have a known primary cancer. Brain metastases, or metastatic brain tumours, are the most common intracranial neoplasm in adults and are a significant cause of morbidity and mortality in patients with advanced cancer. Most brain metastases originate from lung cancer (40–50%), breast cancer (15–25%), or melanoma (5–20%), but renal cell carcinoma, colon cancer, and gynaecological malignancies also make up a significant fraction [2]. While lung cancer accounts for most brain metastases, melanoma has the highest propensity to disseminate to the brain; 50% of patients with advanced melanoma eventually develop metastatic brain disease [3]. The most common brain tumours are meningioma, glioma, and pituitary tumour. Of these 3, meningioma is the most important primary, slow-growing brain tumour; it arises from the meninges, the membrane layers surrounding the brain and spinal cord [4]. Glioma, in contrast, accounts for 78% of malignant brain tumours and arises from the brain’s supporting cells, i.e. the glia. More specifically, gliomas result from mutations that turn normal glial cells malignant, and astrocytomas (tumours of the brain or spinal cord) are their most common type [5].
The phenotypical makeup of glioma tumours can consist of astrocytomas, oligodendrogliomas, or ependymomas [6]. Another type of brain tumour is the pituitary tumour, which is caused by excessive growth of cells in the pituitary gland [7]. Most pituitary tumours are non-cancerous (benign). Benign pituitary gland tumours are also called pituitary adenomas or, according to the recently revised fifth edition of the World Health Organization guidelines, neuroendocrine tumours [8]. Brain tumours can lead to death if not treated, so early diagnosis is of utmost importance [9].

According to Global Cancer Statistics 2020, 308.1 thousand new brain cancer cases were diagnosed worldwide in 2020, and 251.3 thousand cancer-related deaths occurred [10]. The impact of brain tumours is more significant in the United States than in other countries, with approximately 86.9 thousand cases of brain tumours diagnosed in 2019 alone [11]. Magnetic resonance imaging (MRI) scanning is commonly utilized by physicians for accurate diagnosis of brain tumours without surgery [12]. In addition to producing high-resolution pictures with great contrast, MRI also has the benefit of being a radiation-free technology. For this reason, it is the preferred noninvasive imaging technique for identifying many forms of brain malignancy [13].

Machine learning is now widely used to recognize and classify patterns in medical images. It can automatically retrieve and extract knowledge from data and can improve diagnostic accuracy. Machine learning, and deep learning in particular, is therefore a useful tool for improving performance in various medical applications, including disease prediction and diagnosis, molecular and cellular structure recognition, tissue segmentation, and image classification [14–18]. In image recognition and classification, the most successful techniques in use today are convolutional neural networks (CNNs), whose many layers allow high diagnostic accuracy, especially when the number of input images is large [18, 19]. Moreover, such methods pave the way for more accurate identification of tumours and for distinguishing them from other similar diseases.

In this work, GoogleNet is proposed for the automatic classification of brain tumours. Eleven other CNN architectures were evaluated alongside it to determine whether their performance differed notably from that of the proposed method.

Material and methods

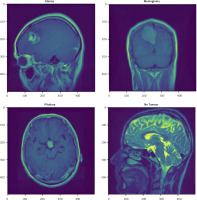

The data used in this work consist of 3264 MRI images, of which 926 show gliomas, 937 meningiomas, 901 pituitary gland tumours, and 500 healthy brains [20]. Figure 1 shows examples of images of the different tumour types. The MRI images in this dataset had different sizes, and because they form the input to the networks, they were resized to 100 × 100 pixels. Of the 3264 images, 80% (2611) were used as training data and 20% (653) as validation data.
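The split sizes quoted above follow directly from the class counts. The short sketch below only reproduces that arithmetic; the variable names are illustrative, and it makes no assumption about how the images were actually assigned to the two subsets.

```python
# Class counts as reported in the text for the dataset [20].
class_counts = {
    "glioma": 926,
    "meningioma": 937,
    "pituitary": 901,
    "healthy": 500,
}

total = sum(class_counts.values())   # 3264 images overall
n_train = round(0.8 * total)         # 80% used for training
n_val = total - n_train              # remaining 20% used for validation

# Share of each class in the full dataset; healthy brains are the smallest class.
class_share = {name: count / total for name, count in class_counts.items()}

print(total, n_train, n_val)
for name, share in class_share.items():
    print(f"{name}: {share:.1%}")
```

The healthy class makes up a noticeably smaller share than the three tumour classes, which is worth keeping in mind when reading the per-class metrics.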

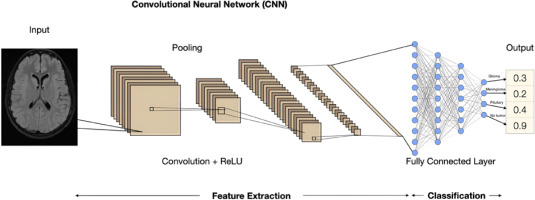

Convolutional neural networks are a class of artificial neural networks, which are means of processing complex data inspired by the function of human neurons and human senses [21]. They are capable of “learning” and analysing complex data sets using a series of interconnected processors and computational pathways [22]. Figure 2 shows the CNN architecture. In the CNN architecture, there are 3 types of layer: convolutional layers, pooling layers that alternate with them, and fully connected layers. The output of the last pooling layer is flattened into a one-dimensional vector so that it can be forwarded to the fully connected layers. Classification of data into classes was based on the Softmax activation function. Batch normalization and regularization were used to avoid overfitting, and the rectified linear unit (ReLU) was used as the activation function in the remaining layers. Adam was used as the optimizer with a learning rate of 0.001. The batch size was set to 64, and training was stopped after 100 epochs; the duration of each epoch depended on the neural network used. The different structures of each neural network are shown in Table 1.
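As a numerical illustration of the two activation functions mentioned above, the following minimal sketch applies ReLU to a few values and turns 4 class scores into probabilities with Softmax. It is written in plain Python rather than Keras, and the input values and the names `CLASSES`, `relu`, `softmax`, and `predict` are hypothetical; it is not the code used in the study.

```python
import math

# Class order is an assumption made for this illustration.
CLASSES = ["glioma", "meningioma", "pituitary", "healthy"]

def relu(values):
    """Rectified linear unit: negative inputs become 0, others pass through."""
    return [max(0.0, v) for v in values]

def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(logits)                       # subtract the maximum for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    norm = sum(exps)
    return [e / norm for e in exps]

def predict(logits):
    """Return the most probable class name together with all probabilities."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return CLASSES[best], probs

hidden = relu([-1.2, 0.5, 3.0])                  # hypothetical hidden-layer values
label, probs = predict([2.0, 0.5, -1.0, 0.1])    # hypothetical output-layer scores
print(hidden, label, [round(p, 3) for p in probs])
```

The class with the largest score always receives the largest probability, so the predicted label is simply the position of the maximum Softmax output.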

Fig. 1

Different samples of magnetic resonance imaging: glioma, meningioma, pituitary tumour, and healthy brain

Table 1

Structure of convolutional neural networks

The metrics used to evaluate the performance of each neural network in classifying MRI images into glioma, meningioma, pituitary tumour, and healthy brain categories included accuracy, precision, recall, F-measure, false positive rate (FPR), and true negative rate. The equations for these metrics are shown below:

TP – true positive, TN – true negative, FP – false positive, FN – false negative
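For a single class treated as the positive class (one-vs-rest), the metrics listed above have the following standard definitions in terms of these counts. The notation is an assumption chosen to match the abbreviations used in the text.

```latex
\begin{align}
\text{Accuracy}  &= \frac{TP + TN}{TP + TN + FP + FN} \\
\text{Precision} &= \frac{TP}{TP + FP} \\
\text{Recall}    &= \frac{TP}{TP + FN} \\
\text{F-measure} &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} \\
\text{FPR}       &= \frac{FP}{FP + TN} \\
\text{TNR}       &= \frac{TN}{TN + FP} = 1 - \text{FPR}
\end{align}
```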

To evaluate classifiers and visualize their performance, receiver operating characteristic (ROC) and confusion matrix diagrams can be useful to describe the results. More specifically, the ROC curve is created by plotting the rate of true positives (TPR) against the FPR, where maximizing TPR and minimizing FPR are ideal outcomes. The confusion matrix allows us to see if there are confounding results or overlaps between classes. It is very important to reduce false positives and false negatives in the modelling process.
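To make the link between a 4-class confusion matrix and the per-class metrics concrete, the sketch below derives TP, FN, FP, and TN for each class in a one-vs-rest fashion. The matrix values are hypothetical and chosen only for illustration; they are not results from this study, and the function names are likewise illustrative.

```python
CLASSES = ["glioma", "meningioma", "pituitary", "healthy"]

# Hypothetical confusion matrix: rows are true classes, columns are predicted classes.
CONFUSION = [
    [45, 3, 1, 1],
    [2, 46, 1, 1],
    [0, 1, 48, 1],
    [1, 2, 0, 47],
]

def per_class_metrics(matrix, k):
    """One-vs-rest metrics for class index k."""
    total = sum(sum(row) for row in matrix)
    tp = matrix[k][k]
    fn = sum(matrix[k]) - tp                  # true class k, predicted as another class
    fp = sum(row[k] for row in matrix) - tp   # another class, predicted as k
    tn = total - tp - fn - fp
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "precision": precision,
        "recall": recall,
        "f_measure": 2 * precision * recall / (precision + recall),
        "fpr": fp / (fp + tn),
        "tnr": tn / (tn + fp),
    }

def overall_accuracy(matrix):
    """Fraction of samples on the diagonal of the confusion matrix."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / sum(sum(row) for row in matrix)

metrics = {name: per_class_metrics(CONFUSION, i) for i, name in enumerate(CLASSES)}
accuracy = overall_accuracy(CONFUSION)
print(f"accuracy = {accuracy:.3f}")
for name, m in metrics.items():
    print(name, {key: round(value, 3) for key, value in m.items()})
```

Each (FPR, recall) pair obtained this way corresponds to one point on that class's ROC curve; the full curve is traced by varying the decision threshold on the predicted probabilities.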

The entire workflow in this study was implemented in Keras with TensorFlow, and the neural networks were designed in the Jupyter environment.

Results

Table 2 shows the results of the 12 convolutional neural networks with their accuracy values as well as the duration of each epoch per neural network. The highest validation accuracy, 97%, was obtained with GoogleNet. MobileNetV2 (96.4%) and Xception (94.5%) also achieved high accuracy. Figure 3 shows the training progress for each CNN, i.e., the accuracy during the training and validation process. The precision, recall, and F-measure of the 4 categories obtained from the 12 CNNs are summarized in Table 3. For the classification of gliomas, GoogleNet has the highest precision (97%), recall (95%), and F-measure (96%). For the classification of meningiomas, GoogleNet has the highest precision (98%), ResNet-50 has the highest recall (98%), and GoogleNet has the highest F-measure (97%). For the classification of pituitary tumours, MobileNetV2 has the highest precision (99%), and DenseNet-BC has the highest recall (100%) and F-measure (99%). For healthy brain classification, ResNet-50 has the highest precision (98%), while MobileNetV2 has the highest recall (98%) and F-measure (97%).

Table 2

Accuracy score and duration time of each epoch per neural network

Table 3

Precision, recall, and F-measure of the convolutional neural networks

Figure 4 A shows the ROC curves of the CNNs with validation accuracy of more than 90%, along with the area under the curve (AUC) for the 4 categories of glioma, meningioma, pituitary tumour, and healthy brain. Conversely, Figure 4 B shows the ROC curves of the CNNs with validation accuracy up to 90%. From the ROC curves, it can be seen that the AUC values for MobileNetV2 are 100% and 99%, indicating the consistency of the model. Figure 5 A shows the confusion matrices of the CNNs with an accuracy of more than 90%, together with the percentages of correct classification in the validation data, while Figure 5 B shows the confusion matrices of the CNNs with an accuracy of up to 90%. As can be seen from the diagonal of these matrices, GoogleNet achieves 97% validation accuracy, while MobileNetV2 achieves 96.4%. Figure 5 A also shows that the GoogleNet architecture correctly classifies 197, 157, and 177 MRI images for meningioma, glioma, and pituitary tumour, respectively, while misclassifying only 19 MRI images.
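The counts quoted for GoogleNet can be cross-checked against its reported validation accuracy. The sketch below assumes that the 19 misclassified images are the total over all 4 classes and that the validation set contains the 653 images described in the Material and methods section. The number of correctly classified healthy images is derived here, not quoted from the source.

```python
# Figures reported in the text for GoogleNet on the validation data.
n_validation = 653
n_misclassified = 19
correct_reported = {"meningioma": 197, "glioma": 157, "pituitary": 177}

n_correct = n_validation - n_misclassified

# Implied count for the healthy class under the assumptions stated above.
healthy_correct_implied = n_correct - sum(correct_reported.values())

accuracy = n_correct / n_validation
print(n_correct, healthy_correct_implied, f"{accuracy:.1%}")
```

Under these assumptions the counts are consistent with the reported validation accuracy of 97%.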

Fig. 4

Receiver operating characteristic plots for the deep convolutional neural network models with validation accuracy over 90% (A)

Fig. 4. Cont.

Receiver operating characteristic plots for the deep convolutional neural network models with validation accuracy under 90% (B)

Discussion

The main goal of this study was to develop 12 deep learning networks to classify MRI images into 4 classes (glioma, meningioma, pituitary tumour, and healthy brain) and to propose the network with the highest accuracy. Many researchers have tried to solve the same problem (multiclass classification of brain tumours) with different CNN models. The studies that addressed the same problem are listed in Table 4. Specifically, Gumaei et al. [23] used a hybrid feature extraction approach with a normalized extreme learning machine to classify brain tumour types. This method, a feedforward neural network (FNN), achieved an accuracy of 94.23%, which is quite low compared to similar studies. Sajjad et al. [24] and Swati et al. [25] both applied the pre-trained VGG19 model to a dataset of 3064 MRI images and achieved almost the same accuracy, 94.5% and 94.8%, respectively. Abiwinanda et al. [26] proposed a CNN model for brain tumour classification using only 700 MRI images and achieved a classification accuracy of 84.1%, which is also quite low compared to similar studies. Sultan et al. [27] proposed a CNN architecture composed of 16 layers and achieved an accuracy of 96.13%. Anaraki et al. [28] combined a genetic algorithm with a CNN for brain tumour prediction. The genetic algorithm, however, does not always yield good accuracy when combined with a CNN, and the resulting accuracy was only 94.2%. Badža et al. [29] performed brain tumour detection using a CNN with 2 convolutional layers and achieved 95.40% accuracy for augmented images. However, an accuracy of 95.40% is sub-optimal compared to the results obtained with the networks used in the present study.

Table 4

Comparative analysis of proposed work with previous works

| Study | Accuracy (%) | Method |
|---|---|---|
| Gumaei et al. [23] | 94.23 | FNN |
| Sajjad et al. [24] | 94.50 | VGG-19 |
| Swati et al. [25] | 94.80 | VGG-19 |
| Abiwinanda et al. [26] | 84.10 | CNN |
| Sultan et al. [27] | 96.10 | CNN |
| Anaraki et al. [28] | 94.20 | CNN + GA |
| Badža et al. [29] | 96.56 | CNN |
| Gunasekara et al. [30] | 92.00 | CNN and AC |
| Masood et al. [31] | 95.90 | RCNN + ResNet-50 |
| Díaz-Pernas et al. [32] | 97.00 | CNN |
| Irmak [33] | 92.60 | CNN |
| Saeedi et al. [34] | 93.44 | CNN + AE |
| Proposed CNN | 97.00 | 12 CNN |

In another study, Gunasekara et al. [30] segmented the tumour region using the active contour approach, which uses energy forces and constraints to extract critical pixels from an image for further processing and interpretation. However, this method has drawbacks, such as getting stuck in local minima, which limited its accuracy to 92%. Masood et al. [31] combined a Mask region-based convolutional neural network (Mask RCNN) with the ResNet-50 model for tumour region localization and achieved 95% classification accuracy. However, more sophisticated object detection models such as YOLO and Faster RCNN perform much better than Mask RCNN. Díaz-Pernas et al. [32] and Irmak [33] both used a CNN model and achieved an accuracy of 97% and 92.6%, respectively. However, both models have drawbacks because they are computationally expensive and do not provide a method for system validation. The most recent study on brain tumour detection is by Saeedi et al. [34], who trained a new 2D CNN and a convolutional autoencoder network, which achieved an accuracy of 93.44% and 90.92%, respectively; this is not optimal compared to the results of the networks used in this study.

GoogleNet has been reported to perform well not only for brain tumour detection and classification, but also for detection and classification of breast cancer [35], lung cancer [36, 37], colon cancer [38], cervical cancer [39], skin cancer [40], and laryngeal cancer [41]. Its efficiency has been demonstrated not only by comparison with various CNNs but also by comparison with other state-of-the-art approaches such as support vector machine, extreme learning machine, and particle swarm optimization methods [40].

Conclusions

This study contributes to the application of artificial intelligence and machine learning in brain tumour detection from MRI images. Prediction of diseases such as tumours, and of their progression, is a critical challenge in the care, treatment, and research of complex heterogeneous diseases. The significance of this study is not only that it achieved an accuracy (97%) matching the highest values reported to date in brain tumour classification, but also that, to our knowledge, it is the only study to compare the performance of so many neural networks simultaneously.